Federated learning: attack simulation

This notebook simulates a simple data poisoning attack in a federated setting. The attack shuffles the training labels of some clients, which thereby become adversarial.
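To make the idea of label shuffling concrete, here is a minimal NumPy sketch on toy data. The helper `shuffle_labels` is hypothetical and is not part of the library; it only illustrates the general technique of permuting a client's labels while leaving the images untouched.

```python
import numpy as np


def shuffle_labels(labels, seed=None):
    """Return a copy of `labels` with its rows randomly permuted.

    Hypothetical helper for illustration only, not the library's code.
    """
    rng = np.random.default_rng(seed)
    permutation = rng.permutation(len(labels))
    return labels[permutation]


# Toy client: six samples with one-hot labels over three classes.
client_labels = np.eye(3)[[0, 1, 2, 0, 1, 2]]
poisoned_labels = shuffle_labels(client_labels, seed=0)

# The images stay the same; only the image-label pairing changes.
print(poisoned_labels.argmax(axis=1))
```

Note that shuffling preserves the label distribution of the client: the same labels are present, just attached to different samples.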

This notebook presents the class FederatedDataAttack in federated_attack.py, whose goal is to implement any attack on the federated data.

For more information about basic federated learning concepts, please refer to the notebook Federated learning basic concepts.

For this simulation, we use the EMNIST Digits dataset.

from shfl.data_base import Emnist

from shfl.data_distribution import NonIidDataDistribution

import numpy as np

import random

database = Emnist()

train_data, train_labels, test_data, test_labels = database.load_data()

Now, we distribute the data among the client nodes using a non-IID distribution over 10% of the data.

noniid_distribution = NonIidDataDistribution(database)

nodes_federation, test_data, test_labels = noniid_distribution.get_nodes_federation(num_nodes=20, percent=10)

At this point, we are ready to apply a data attack to some nodes.

For this simulation, we apply data poisoning to 20% of the nodes.
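As a rough illustration of what selecting 20% of the nodes means, the sketch below samples adversarial node indices with the standard library. The function `pick_adversaries` is a hypothetical name, and the sampling strategy is an assumption; the library may select nodes differently, so the indices produced here are not expected to match the ones shown later in this notebook.

```python
import random


def pick_adversaries(num_nodes, percentage, seed=None):
    """Sample `percentage` percent of the node indices without replacement.

    Hypothetical helper for illustration only.
    """
    rng = random.Random(seed)
    num_adversaries = int(num_nodes * percentage / 100)
    return rng.sample(range(num_nodes), num_adversaries)


adversaries = pick_adversaries(num_nodes=20, percentage=20, seed=123)
print(len(adversaries))  # 4 adversarial nodes out of 20
```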

To do so, we use the class FederatedPoisoningDataAttack, which simulates data poisoning in a certain percentage of the nodes.

We create a FederatedPoisoningDataAttack object with the percentage set to 20% and apply the attack over nodes_federation.

from shfl.private.federated_attack import FederatedPoisoningDataAttack

random.seed(123)

simple_attack = FederatedPoisoningDataAttack(percentage=20)

simple_attack(nodes_federation=nodes_federation)

We can retrieve the adversarial nodes in order to inspect the applied attack.

adversarial_nodes = simple_attack.adversaries

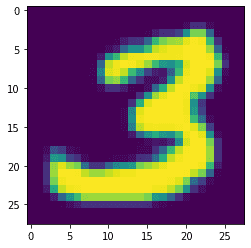

adversarial_nodes

[1, 8, 2, 13]

To show the effect of the attack, we select one adversarial client and an index position, and display the image together with the label associated with it. We change the data access protection (see NodesFederation) in order to access the data. Because the poisoning is a random shuffle, the label may happen to match for some samples, but in most cases it will not.

import matplotlib.pyplot as plt

from shfl.private.utils import unprotected_query

adversarial_index = 0

data_index = 10

nodes_federation.configure_data_access(unprotected_query);

plt.imshow(nodes_federation[adversarial_nodes[adversarial_index]].query().data[data_index])

print(nodes_federation[adversarial_nodes[adversarial_index]].query().label[data_index])

[0. 0. 0. 0. 0. 1. 0. 0. 0. 0.]
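To get a feel for how often a shuffled label still matches the original one, the following sketch measures the match rate on toy data. It assumes integer labels spread evenly over 10 classes, which is a simplification: a node in a non-IID federation may hold fewer classes, in which case accidental matches become more frequent.

```python
import numpy as np

rng = np.random.default_rng(0)

# Toy integer labels: 1,000 samples spread evenly over 10 digit classes.
original = np.repeat(np.arange(10), 100)

# Random shuffle of the labels, as in the poisoning attack.
shuffled = rng.permutation(original)

# Fraction of positions whose label is unchanged by the shuffle.
match_rate = np.mean(original == shuffled)
print(f"labels unchanged after shuffling: {match_rate:.1%}")
```

With balanced classes, a shuffled label agrees with the original roughly one time in ten on average, so the large majority of samples on an adversarial node end up mislabeled.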

At this point, we can train a federated learning model across these clients (adversarial and regular) using a specific aggregation operator.
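One common choice of aggregation operator is a weighted average of the clients' model parameters. The sketch below is a minimal NumPy illustration under that assumption; `federated_average` is a hypothetical helper and does not represent the library's aggregator API.

```python
import numpy as np


def federated_average(client_params, client_sizes):
    """Average client parameter vectors, weighted by local dataset size.

    Hypothetical helper for illustration only.
    """
    stacked = np.stack(client_params)
    return np.average(stacked, axis=0, weights=client_sizes)


# Two toy clients: the second holds three times as much data as the first.
params = [np.array([1.0, 1.0]), np.array([3.0, 3.0])]
aggregated = federated_average(params, client_sizes=[1, 3])
print(aggregated)
```

Because a plain average gives every client a say in proportion to its data, parameters learned from poisoned labels flow directly into the aggregated model, which is why the choice of operator matters in the presence of adversarial nodes.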