Differential privacy: binary average attack

The basic idea of differential privacy is to make a particular query stochastic so that the underlying data is kept private. The average attack consists of performing the same query many times, in order to reliably estimate the underlying data. This is, of course, not desirable; so we should either limit the number of queries or design algorithms that are not vulnerable to this kind of attack.

In this notebook, we present a simple example of this phenomenon, based on a single node that contains one binary number that encodes whether the node is guilty () or innocent (). A randomized response algorithm (see The Algorithmic Foundations of Differential Privacy) is used to query the node, in order to preserve the privacy of . Moreover, the use of Adaptive Differential Privacy for privacy protection is illustrated.

Index

1) Average attack simulation

We start by creating a single node that contains a binary number. In this case, we set this number to 1 (guilty). By setting f1=0.8, we make sure that 80% of the times we query the node whose data is 1, we get an answer of 1. In the remaining 20% of the cases we obtain an answer of 0.

import numpy as np

from shfl.private.node import DataNode

from shfl.differential_privacy.mechanism import RandomizedResponseBinary

from math import log, exp

import matplotlib.pyplot as plt

n = 1 #the node is guilty

node_single = DataNode()

node_single.set_private_data(name="guilty", data=np.array([n]))

data_access_definition = RandomizedResponseBinary(f0=0.8, f1=0.8, epsilon=log(4) + 1e-10)

node_single.configure_data_access("guilty", data_access_definition)2022-03-24 14:16:27.375645: W tensorflow/stream_executor/platform/default/dso_loader.cc:64] Could not load dynamic library 'libcudart.so.11.0'; dlerror: libcudart.so.11.0: cannot open shared object file: No such file or directory

2022-03-24 14:16:27.375665: I tensorflow/stream_executor/cuda/cudart_stub.cc:29] Ignore above cudart dlerror if you do not have a GPU set up on your machine.

/home/f.palomino/Desktop/venvpruebas/environment/lib/python3.8/site-packages/tqdm/auto.py:22: TqdmWarning: IProgress not found. Please update jupyter and ipywidgets. See https://ipywidgets.readthedocs.io/en/stable/user_install.html

from .autonotebook import tqdm as notebook_tqdmIf we perform the query just once, we cannot be sure that the result matches the true data.

result = node_single.query(private_property="guilty")

print("The result of one query is: " + str(int(result)))The result of one query is: 1If we perform the query times and take the average, we can estimate the true data with an error that goes towards zero as increases.

N = 500

result_query = []

for i in range(N):

result_query.append(node_single.query(private_property="guilty"))

result_query = np.array(result_query)

print(np.mean(result_query))0.778We see that the average result of the query is close to 0.8. This allows us to conclude that the raw answer is most likely 1. Otherwise, the result would have been close to 0.2.

2) Permanent randomized response

A possible solution to this problem (e.g. see RAPPOR technology and Jiang 2019) is to create a node that contains two pieces of information: the true data and a permanent randomized response (PRR). The latter is initialized to None and, once the node receives the first query, it creates a random binary number following the algorithm described above, which is saved as the PRR. The result of the query is then a randomized response using the PRR as input. This way, even if the query is performed a large number of times, the attacker can only guess the PRR with certainty, not the true data.

node_single_prr = DataNode()

data_binary = np.array([1]) #the node is guilty

node_single_prr.set_private_data(name="guilty", data=np.array([n]))

node_single_prr.configure_data_access("guilty", data_access_definition)

permanent_response = node_single_prr.query(private_property="guilty")

print("The PRR is: " + str(int(permanent_response)))

# we save the prr as a new piece of information

node_single_prr.set_private_data(name="guilty", data=np.append(data_binary, permanent_response))The PRR is: 1From now on, all the external queries are done over the permanent randomized data, while the raw data remains completely hidden.

N = 500

result_query = np.empty(shape=(N,))

for i in range(N):

result_query[i] = node_single_prr.query(private_property="guilty")[1]

print(np.mean(result_query))0.794The result is not always close to 0.8, since the permanent response might be 0. The average attack may, at best, identify the permanent randomized response, but not the raw data.

3) Adaptive differential privacy

Adaptive Differential Privacy is a different approach to managing multiple queries against the same data. The basic idea consists of registering all the interactions until a global epsilon is spent. Then, making more queries to the data becomes forbidden, as the privacy budget limits it. Let's experiment with different values of epsilon.

from shfl.differential_privacy.composition import AdaptiveDifferentialPrivacy

from shfl.differential_privacy.composition import ExceededPrivacyBudgetError

global_epsilon_delta = (7, 0)

def experiment(global_epsilon_delta):

node_single = DataNode()

node_single.set_private_data(name="guilty", data=np.array([n]))

data_access_definition = AdaptiveDifferentialPrivacy(global_epsilon_delta)

differentially_private_mechanism = RandomizedResponseBinary(f0=0.8, f1=0.8, epsilon=log(4) + 1e-10)

node_single.configure_data_access("guilty", data_access_definition)

N = 500

result_query = []

for i in range(N):

try:

result_query.append(node_single.query(private_property="guilty",

mechanism=differentially_private_mechanism))

except ExceededPrivacyBudgetError:

print("The privacy budget has been spent at run {}".format(i+1))

break

average = []

mean_queries = []

for i in range(0,len(result_query)):

average.append(result_query[i])

summatory = 0

for j in range(0,len(average)):

summatory += average[j]

mean_queries.append((summatory/(i+1)))

return result_query,mean_queries

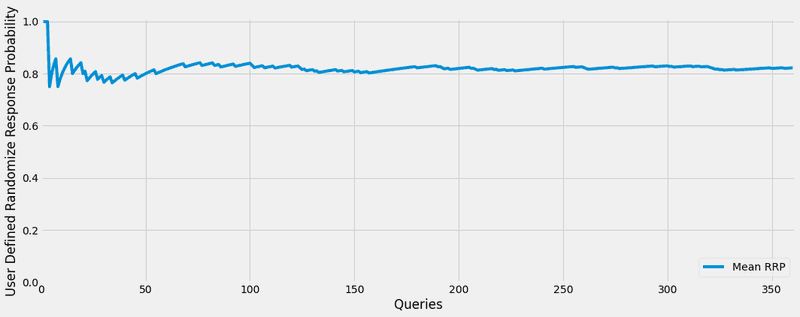

_,_ = experiment(global_epsilon_delta)The privacy budget has been spent at run 6We can see that the privacy budget with an of 7 has been spent early, but what if we increase this value? As discussed earlier, we can see that increasing the value leads to the estimation of the permanent randomized response value.

global_epsilon_delta = (500, 0)

result_query, mean_queries = experiment(global_epsilon_delta)The privacy budget has been spent at run 361plt.style.use('fivethirtyeight')

fig, ax = plt.subplots(nrows=1, ncols=1, figsize=(16,6))

k_values = np.arange(1, len(result_query)+1, 1)

ax.plot(k_values, mean_queries, label = "Mean RRP")

ax.set_xlabel('Queries')

ax.set_ylabel('User Defined Randomize Response Probability')

ax.set_xlim([0., len(result_query)+1])

ax.set_ylim([0, 1.01])

plt.legend(title = "", loc="lower right")

plt.show()

What we can conclude with this graph, is that we do not allow the user to keep sending queries. This way, the data is protected with more robustness.