Federated learning: sampling methods

Sherpa.ai Federated Learning Framework provides methods to easily set up a federated learning experiment. In particular, it is possible to simulate a federated setting by distributing a dataset over federated clients. In the present notebook, this functionality is demonstrated for both the independent and identically distributed (IID) and the non-IID cases. We will show how these methods work and the characteristics of the resulting datasets.

First of all, we load the EMNIST dataset, on which we will perform all these operations. A large multi-class dataset like this one is a reasonable stand-in for real-world scenarios.

import matplotlib.pyplot as plt

import numpy as np

import shfl

from shfl.auxiliar_functions_for_notebooks.functionsFL import *

from shfl.data_base.emnist import Emnist

from shfl.data_distribution.data_distribution_iid import IidDataDistribution

from shfl.data_distribution.data_distribution_non_iid import NonIidDataDistribution

database = Emnist()

train_data, train_labels, test_data, test_labels = database.load_data()

Let's inspect some properties of the loaded data.

print(len(train_data))

print(len(test_data))

print(type(train_data[0]))

train_data[0].shape240000

40000

<class 'numpy.ndarray'>

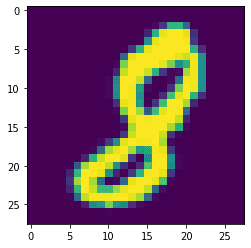

(28, 28)So, as we have seen, our dataset is composed of a set of matrices that are 28 by 28. Before starting with the federated scenario, we can take a look at a sample of the training data.

plt.imshow(train_data[0])<matplotlib.image.AxesImage at 0x7fcf0226d8b0>

IID federated sampling

In the IID scenario, each node has independent and identically distributed access to all observations in the dataset.

The only available choices are:

- Percentage of the dataset used

- Number of instances per node

- Sampling with or without replacement

Percentage of the dataset used in an IID scenario. The percent parameter indicates the percentage of the total number of observations in the dataset that is split across the clients. Since this subset is chosen at random, it is statistically representative of the whole dataset and follows the same distribution. The value must lie between 0 and 100.
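As a rough illustration of the percent parameter, the sketch below draws a uniform random subset of indices. It assumes the subset is drawn without replacement and that the size is truncated to an integer; the helper name `select_percent` is hypothetical and not part of the Sherpa.ai API.

```python
import random


def select_percent(n_total, percent, seed=None):
    """Hypothetical helper: randomly pick `percent`% of the indices 0..n_total-1."""
    if not 0 <= percent <= 100:
        raise ValueError("percent must be between 0 and 100")
    rng = random.Random(seed)
    n_used = int(n_total * percent / 100)
    # A uniform random subset follows, in expectation, the distribution of the full dataset.
    return rng.sample(range(n_total), n_used)


subset = select_percent(240000, 50, seed=0)
print(len(subset))  # 120000
```

With the 240000 EMNIST training samples and percent = 50, 120000 observations are available to be distributed among the nodes.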

Number of instances per node in an IID scenario. The weights parameter indicates the deterministic distribution of the number of samples per node, as a ratio over the total number of observations used for the simulation. For instance, weights = [0.2, 0.3, 0.5] means that the first node is assigned 20% of the observations used, the second node 30%, and the third node 50%.
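The arithmetic behind the weights parameter can be sketched as follows. The helper `node_sizes` is hypothetical, and rounding to the nearest integer is an assumption; the framework may round differently.

```python
def node_sizes(n_used, weights):
    """Hypothetical helper: number of samples assigned to each node.

    n_used is the number of observations selected by `percent`;
    each node receives the fraction of it given by its weight (rounded, by assumption).
    """
    return [round(n_used * w) for w in weights]


print(node_sizes(120000, [0.2, 0.3, 0.5]))  # [24000, 36000, 60000]
print(node_sizes(120000, [0.1, 0.2, 0.3]))  # [12000, 24000, 36000]
```

The second call reproduces, by hand, the per-client counts obtained in the first IID example of this notebook (percent = 50, weights = [0.1, 0.2, 0.3]).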

Note that the weights do not necessarily have to sum to one; whether they must depends on the sampling option, as explained below.

Sampling with or without replacement

The sampling parameter takes one of two values, 'with_replacement' or 'without_replacement'. It indicates whether an observation assigned to a node is removed from the dataset pool and can therefore be assigned only once (sampling = 'without_replacement'), or is returned to the pool and can therefore be selected again for another assignment (sampling = 'with_replacement').
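A minimal sketch of the two sampling modes, using only the standard library. The function `assign_indices` is hypothetical and only mirrors the behavior described above; it is not the framework's implementation. Without replacement, it shuffles the pool once and hands out consecutive slices, so no index is assigned twice. With replacement, each node draws independently from the full pool.

```python
import random


def assign_indices(pool, sizes, sampling, seed=None):
    """Hypothetical sketch of the two sampling modes (not the framework's code)."""
    rng = random.Random(seed)
    if sampling == "without_replacement":
        if sum(sizes) > len(pool):
            raise ValueError("not enough observations to sample without replacement")
        shuffled = list(pool)
        rng.shuffle(shuffled)
        nodes, start = [], 0
        for size in sizes:
            # Taking consecutive slices of a shuffled copy removes them from the pool.
            nodes.append(shuffled[start:start + size])
            start += size
        return nodes
    if sampling == "with_replacement":
        # Every draw uses the full pool, so indices can repeat within and across nodes.
        return [rng.choices(pool, k=size) for size in sizes]
    raise ValueError("sampling must be 'with_replacement' or 'without_replacement'")


pool = list(range(10))
print(assign_indices(pool, [3, 3, 4], "without_replacement", seed=1))
print(assign_indices(pool, [6, 6, 6], "with_replacement", seed=1))
```

In the first call the three nodes together contain each of the 10 indices exactly once; in the second, 18 indices are drawn from a pool of 10, which is only possible with replacement.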

Combinations of the weights and sampling parameters: sampling = 'without_replacement'

When sampling = 'without_replacement', the total number of samples assigned to the nodes cannot exceed the number of available observations in the dataset. This constrains the weights parameter: the sum of the weights must be less than or equal to one. If the sum is greater than one, the weights are normalized to sum to one. The possible cases are:

- If the sum of the weights is less than one when sampling = 'without_replacement', the resulting distribution of observations to the nodes (the union of the nodes' sets of samples) covers only part of the portion of the dataset selected by percent.

iid_distribution = IidDataDistribution(database)

nodes_federation, test_data, test_label = iid_distribution.get_nodes_federation(
    num_nodes=3, percent=50, weights=[0.1, 0.2, 0.3])

print(type(nodes_federation))

print(nodes_federation.num_nodes())

print("Number of instances per client:")

nodes_federation.apply_data_transformation(number_of_instances);

<class 'shfl.private.federated_operation.NodesFederation'>

3

Number of instances per client:

12000

24000

36000

- If the sum of the weights is equal to one when sampling = 'without_replacement', the resulting distribution of observations to the nodes (the union of the nodes' sets of samples) is exactly the portion of the dataset selected by percent; that is, the distributed samples form a partition of it.

iid_distribution = IidDataDistribution(database)

nodes_federation, test_data, test_label = iid_distribution.get_nodes_federation(
    num_nodes=3, percent=50, weights=[0.3, 0.3, 0.4])

print("Number of instances per client:")

nodes_federation.apply_data_transformation(number_of_instances);

Number of instances per client:

36000

36000

48000

- If the sum of the weights is greater than one when sampling = 'without_replacement', the weights are normalized to sum to one. For instance, with sampling = 'without_replacement' and weights = [0.2, 0.3, 0.7], the sum of the weights is 1.2 > 1, so the effective weights are [0.2/1.2, 0.3/1.2, 0.7/1.2].
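The normalization rule can be checked by hand with a short sketch. The helper `effective_weights` is hypothetical; it simply encodes the rule stated in the text (rescale only when sampling without replacement and the weights sum to more than one), and rounding to integers is an assumption.

```python
def effective_weights(weights, sampling="without_replacement"):
    """Hypothetical helper mirroring the normalization rule described in the text."""
    total = sum(weights)
    if sampling == "without_replacement" and total > 1:
        # Rescale so that the weights sum to one.
        return [w / total for w in weights]
    # Sums <= 1, or sampling with replacement: weights are used as given.
    return list(weights)


n_used = 120000  # 50% of the 240000 training samples
weights = effective_weights([0.2, 0.3, 0.7])
print([round(n_used * w) for w in weights])  # [20000, 30000, 70000]
```

These are the per-client counts that the framework reports for this configuration.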

iid_distribution = IidDataDistribution(database)

nodes_federation, test_data, test_label = iid_distribution.get_nodes_federation(
    num_nodes=3, percent=50, weights=[0.2, 0.3, 0.7])

print("Number of instances per client:")

nodes_federation.apply_data_transformation(number_of_instances);

Number of instances per client:

20000

30000

70000

Combinations of the weights and sampling parameters: sampling = 'with_replacement'

When sampling = 'with_replacement', the total number of samples assigned to the nodes can be greater or less than the number of available observations in the dataset. This removes any constraint on the values of the weights parameter. The resulting node datasets are subsets of the original dataset that may share observations. Moreover, each node may contain zero, one, or several copies of a given observation.
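The effects described above (sizes that add up to more than the pool, observations shared between nodes, repeated observations within a node) can be seen in a small hypothetical simulation. It uses a pool of 1000 stand-in indices instead of the real dataset and draws each node independently with `random.choices`; this is an illustration, not the framework's code.

```python
import random
from collections import Counter

rng = random.Random(42)
pool = list(range(1000))       # stand-in for the indices of the data used
sizes = [500, 300, 700]        # weights [0.5, 0.3, 0.7] applied to 1000 samples
# Sampling with replacement: every node draws from the full pool.
nodes = [rng.choices(pool, k=s) for s in sizes]

print(sum(len(n) for n in nodes))  # 1500, larger than the pool of 1000
for i, node in enumerate(nodes):
    counts = Counter(node)
    repeated = sum(1 for c in counts.values() if c > 1)
    print(f"node {i}: {len(counts)} distinct observations, {repeated} drawn more than once")
shared = set(nodes[0]) & set(nodes[1])
print(f"observations shared by nodes 0 and 1: {len(shared)}")
```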

iid_distribution = IidDataDistribution(database)

nodes_federation, test_data, test_label = iid_distribution.get_nodes_federation(
    num_nodes=3, percent=50, weights=[0.5, 0.3, 0.7], sampling="with_replacement")

print("Number of instances per client:")

nodes_federation.apply_data_transformation(number_of_instances);

Number of instances per client:

60000

36000

84000

Non-IID federated sampling

In contrast to the IID scenario, where the concept is quite clear, data can be non-IID for several reasons:

1. Non-identical client distributions: the data of different clients do not follow the same probability distribution. The difference in probability distributions can be due to several factors:

1.1. Feature distribution skew: the features of different clients follow different probability distributions. This case is typical of personal data, such as handwritten digits.

1.2. Label distribution skew: the label distribution varies across clients. This kind of skew is typical of area-dependent data (e.g., the species present in a certain place).

1.3. Concept shift: data with the same label have different features across clients (same label, different features), e.g., due to cultural differences; or data with the same features have different labels across clients (same features, different label), e.g., due to personal preferences.

1.4. Unbalancedness: the amount of data commonly varies significantly between clients.

2. Non-independent client distributions: the data distribution of some clients depends in some way on that of others. For example, cross-device FL training is typically performed at night, local time, so which devices participate depends on their time zone, which introduces a geographic bias in the data.

3. Non-identical and non-independent distributions: in real FL scenarios, data may be non-IID for several reasons simultaneously, due to the particular nature of the data source.

As explained above, there are many reasons why a dataset may be non-IID. At the moment, the framework implements label distribution skew: for each client, we randomly choose how many labels it knows and which ones they are. The labels known by each client are shown further below.
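The label assignment step can be sketched as follows. This is a hypothetical illustration: the name `assign_known_labels` is invented, and drawing the number of known labels uniformly between 1 and the total number of labels is an assumption; the framework's actual choice of distribution may differ.

```python
import random


def assign_known_labels(num_clients, labels, seed=None):
    """Hypothetical sketch of label distribution skew.

    For each client, draw how many labels it knows (assumed uniform between 1
    and all of them) and then which labels those are.
    """
    rng = random.Random(seed)
    known = []
    for _ in range(num_clients):
        k = rng.randint(1, len(labels))              # number of labels this client knows
        known.append(sorted(rng.sample(labels, k)))  # which labels they are
    return known


for client_labels in assign_known_labels(3, list(range(10)), seed=0):
    print(client_labels)
```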

In this case, the available options are the same, and have the same meaning, as in IID sampling. Regarding the sampling parameter: when sampling = 'without_replacement', some clients may receive fewer samples than specified by the weights parameter because of the non-IID restrictions (clients know only a reduced number of labels), since there may not be enough data left for their labels. This can also happen when sampling = 'with_replacement', but it is less likely, because data from the same labels can be reused; it only occurs when the amount of data assigned to a client is greater than the total amount of data of its known labels.
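The shortfall described above can be illustrated with a toy model. The helper `achievable_sizes` is hypothetical, and serving clients one after another while depleting each client's labels in order is an assumption made for clarity; the framework may select samples differently.

```python
def achievable_sizes(label_counts, client_labels, requested, sampling):
    """Hypothetical sketch: how many samples each client can actually receive.

    A client only receives samples of the labels it knows. Without replacement,
    samples given to one client are removed from the shared pool (assumed to be
    served sequentially), so later clients may come up short. With replacement
    the pool is never depleted, but a client still cannot get more than the
    total amount of data of its labels.
    """
    remaining = dict(label_counts)
    sizes = []
    for labels, want in zip(client_labels, requested):
        available = sum(remaining[label] for label in labels)
        got = min(want, available)
        sizes.append(got)
        if sampling == "without_replacement":
            to_take = got
            for label in labels:  # deplete the client's labels in order
                take = min(to_take, remaining[label])
                remaining[label] -= take
                to_take -= take
    return sizes


counts = {0: 100, 1: 100, 2: 100}
clients = [[0, 1], [0]]  # the second client only knows label 0
print(achievable_sizes(counts, clients, [150, 100], "without_replacement"))  # [150, 0]
print(achievable_sizes(counts, clients, [150, 100], "with_replacement"))     # [150, 100]
print(achievable_sizes(counts, clients, [150, 250], "with_replacement"))     # [150, 100]
```

The last call shows the rarer case with replacement: the second client asks for 250 samples, but only 100 samples of label 0 exist.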

Here, we compare the amount of data per client with and without replacement:

non_iid_distribution = NonIidDataDistribution(database)

nodes_federation, test_data, test_label = non_iid_distribution.get_nodes_federation(
    num_nodes=3, percent=100, weights=[0.2, 0.3, 0.2])

print("Number of instances per client:")

nodes_federation.apply_data_transformation(number_of_instances);

Number of instances per client:

48000

72000

48000

non_iid_distribution = NonIidDataDistribution(database)

nodes_federation, test_data, test_label = non_iid_distribution.get_nodes_federation(
    num_nodes=3, percent=100, weights=[0.2, 0.3, 0.2], sampling="with_replacement")

print("Number of instances per client:")

nodes_federation.apply_data_transformation(number_of_instances);

Number of instances per client:

48000

72000

48000

In this run, both sampling options produce the same number of instances per client. Let's look at the labels known by each client in the federation we just created, to show the label distribution skew that can occur.

print("Known labels per client:")

nodes_federation.apply_data_transformation(unique_labels);

Known labels per client:

[0 1 2 3 5 6 7 8]

[1 4 5 7]

[0 2]

This label distribution could plausibly occur in a real-life scenario. Missing labels are a problem in a purely local setting: a local model never sees the absent classes, so it cannot learn to distinguish its own labels from new cases that do not match any of them. Horizontal federated learning can be used to tackle these differences by learning from all the parties, which reduces the impact of label skew in this case.