Federated models: regression using the California housing database

In this notebook, we explain how to use a federated learning environment to build a regression model with a neural network from the TensorFlow framework. Compared with other federated examples, the steps differ mainly in how the model is created; the federated part is essentially the same.


1) The data

First, we load a dataset (included in the framework) to allow for regression experiments.

import shfl

from shfl.data_base.california_housing import CaliforniaHousing

from shfl.private.federated_operation import NodesFederation

from shfl.auxiliar_functions_for_notebooks.functionsFL import *

database = CaliforniaHousing()

train_data, train_labels, test_data, test_labels = database.load_data()

Now, we are going to explore the data:

print("Shape of train_data: " + str(train_data.shape))

print("Shape of train_labels: " + str(train_labels.shape))

print("One sample features: " + str(train_data[0]))

print("One sample label: " + str(train_labels[0]))

Shape of train_data: (16512, 8)
Shape of train_labels: (16512,)
One sample features: [ 2.62500000e+00 3.10000000e+01 4.41666667e+00 9.34523810e-01
 1.16300000e+03 3.46130952e+00 3.79600000e+01 -1.21220000e+02]
One sample label: 0.721

2) The model

Instead of using a linear regression from another framework, we build a small, shallow neural network for regression. It has a single hidden layer of 8 ReLU units followed by one output unit with no activation, so it is only slightly more expressive than a linear regression; keeping only the one-unit output layer (no hidden layer) would make it exactly a linear regression. The input corresponds to the eight features, and the single output to the value we want to predict.
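To make the difference between "linear" and "shallow but non-linear" concrete, the following sketch uses plain NumPy with random, untrained weights shaped like the Keras layers below (the names `linear_model`, `relu_network` and `nonlinearity_gap` are illustrative, not part of the framework). It relies on the fact that any affine map f satisfies f(x) + f(-x) - 2 f(0) = 0, whereas a ReLU hidden layer breaks that identity.

```python
import numpy as np

rng = np.random.default_rng(0)
n_features = 8

# Illustrative random weights (not trained), shaped like the layers in model_builder().
w_lin, b_lin = rng.normal(size=(n_features, 1)), rng.normal(size=1)  # Dense(1) alone
w_hid, b_hid = rng.normal(size=(n_features, 8)), rng.normal(size=8)  # Dense(8, relu)
w_out, b_out = rng.normal(size=(8, 1)), rng.normal(size=1)           # Dense(1) output


def linear_model(x):
    """One Dense(1) layer without activation: an affine map, i.e. linear regression."""
    return x @ w_lin + b_lin


def relu_network(x):
    """Dense(8, relu) followed by Dense(1): piecewise linear, hence non-linear overall."""
    hidden = np.maximum(0.0, x @ w_hid + b_hid)
    return hidden @ w_out + b_out


def nonlinearity_gap(f, x):
    """For any affine f, f(x) + f(-x) - 2 f(0) is 0; a clearly non-zero value reveals non-linearity."""
    zero = np.zeros_like(x)
    return float(np.abs(f(x) + f(-x) - 2.0 * f(zero)).max())


x = 100.0 * rng.normal(size=(1, n_features))
print("linear model gap:", nonlinearity_gap(linear_model, x))
print("ReLU network gap:", nonlinearity_gap(relu_network, x))
```

The linear model's gap is zero up to floating-point rounding, while the ReLU network's gap is large, which is why the model below is best described as a small non-linear regressor.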

import tensorflow as tf

def model_builder():
    # Create the model
    model = tf.keras.models.Sequential()
    model.add(tf.keras.layers.Dense(8, input_dim=8, kernel_initializer='normal', activation='relu'))
    model.add(tf.keras.layers.Dense(1, kernel_initializer='normal'))

    # Define the training configuration
    loss = tf.keras.losses.MeanSquaredError()
    optimizer = tf.keras.optimizers.Adam()
    metrics = [tf.keras.metrics.RootMeanSquaredError()]

    return shfl.model.DeepLearningModel(model=model, loss=loss, optimizer=optimizer, metrics=metrics)

3) Run the federated learning experiment

We define the federated environment:

iid_distribution = shfl.data_distribution.IidDataDistribution(database)

nodes_federation, test_data, test_label = iid_distribution.get_nodes_federation(num_nodes=5, percent=30)  # percent=20 or percent=10 can also be tried

aggregator = shfl.federated_aggregator.FedAvgAggregator()

federated_government = shfl.federated_government.FederatedGovernment(model_builder(), nodes_federation, aggregator)
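Conceptually, the environment above splits the data IID across five nodes, trains one model per node, and lets the aggregator average their parameters. The sketch below reproduces that loop for a single round in plain NumPy under clearly stated assumptions: the data are synthetic, each node fits an ordinary least-squares model instead of the Keras network, and `fed_avg` is a hypothetical helper implementing the usual FedAvg rule (parameters weighted by local sample count). It does not claim to mirror the internals of `FedAvgAggregator`; with equally sized IID shards, as here, the weighted and plain averages coincide anyway.

```python
import numpy as np

rng = np.random.default_rng(42)

# Synthetic regression data standing in for the housing features (an assumption).
n_samples, n_features, num_nodes = 1000, 8, 5
X = rng.normal(size=(n_samples, n_features))
true_w = rng.normal(size=n_features)
y = X @ true_w + 0.1 * rng.normal(size=n_samples)

# IID split: shuffle the row indices, then cut them into equally sized shards, one per node.
shards = np.array_split(rng.permutation(n_samples), num_nodes)


def local_fit(X_node, y_node):
    """Each node fits its own least-squares model (a stand-in for local Keras training)."""
    w, *_ = np.linalg.lstsq(X_node, y_node, rcond=None)
    return [w]  # a list of parameter arrays, like Keras get_weights()


def fed_avg(params_per_node, sizes):
    """Average each parameter array across nodes, weighted by local data size."""
    weights = np.asarray(sizes, dtype=float) / np.sum(sizes)
    return [
        sum(wt * params[i] for wt, params in zip(weights, params_per_node))
        for i in range(len(params_per_node[0]))
    ]


local_params = [local_fit(X[idx], y[idx]) for idx in shards]
global_params = fed_avg(local_params, [len(idx) for idx in shards])
print("max |global - true| weight error:", np.abs(global_params[0] - true_w).max())
```

Returning parameters as lists of arrays keeps the averaging independent of the model type, which is the same reason a neural network's layer weights can be aggregated entry by entry.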


Next, we reshape the labels that the nodes will use for training into column vectors, matching the model's single output:

import numpy as np

def reshape_data(labeled_data):
    # Turn the label vector of shape (n,) into a column of shape (n, 1)
    labeled_data.label = np.reshape(labeled_data.label, (labeled_data.label.shape[0], 1))

nodes_federation.apply_data_transformation(reshape_data)

test_label = np.reshape(test_label, (test_label.shape[0], 1))

federated_government.run_rounds(3, test_data, test_label)

Evaluation in round 0:
Collaborative model test -> loss: 6.623020172119141 root_mean_squared_error: 3.520354986190796

Evaluation in round 1:
Collaborative model test -> loss: 1.4393712282180786 root_mean_squared_error: 2.651242256164551

Evaluation in round 2:
Collaborative model test -> loss: 1.337330937385559 root_mean_squared_error: 2.267554998397827

4) Add differential privacy

We now want to add Differential Privacy to our federated learning experiment and assess its effect on the quality of the global model. Below, we show how to do this in a few simple steps using the Sherpa.ai framework: once a sensitivity has been chosen, we can run the private federated experiment with the desired differential privacy mechanism.
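As background for the steps that follow, here is a minimal sketch of the standard Laplace mechanism applied to a list of model parameters. It is not the framework's `LaplaceMechanism` code; `laplace_mechanism` is a hypothetical helper that adds independent Laplace noise with scale b = sensitivity / epsilon to every parameter entry, which is why the chosen sensitivity directly controls how much the shared parameters are distorted.

```python
import numpy as np


def laplace_mechanism(params, sensitivity, epsilon, rng=None):
    """Return a noisy copy of a list of parameter arrays.

    Every entry receives independent Laplace(0, b) noise with
    scale b = sensitivity / epsilon (the standard Laplace mechanism).
    """
    if sensitivity <= 0 or epsilon <= 0:
        raise ValueError("sensitivity and epsilon must be positive")
    rng = np.random.default_rng() if rng is None else rng
    scale = sensitivity / epsilon
    return [p + rng.laplace(loc=0.0, scale=scale, size=np.shape(p)) for p in params]


# Parameter shapes of model_builder(): Dense(8) kernel and bias, Dense(1) kernel and bias.
params = [np.zeros((8, 8)), np.zeros(8), np.zeros((8, 1)), np.zeros(1)]
rng = np.random.default_rng(0)
for sensitivity in (0.02, 0.2):
    noisy = laplace_mechanism(params, sensitivity=sensitivity, epsilon=0.5, rng=rng)
    mean_abs_noise = np.mean(np.abs(np.concatenate([n.ravel() for n in noisy])))
    print(f"sensitivity={sensitivity}: mean |noise| = {mean_abs_noise:.4f}")
```

Since the mean absolute value of Laplace(0, b) noise is b, a tenfold larger sensitivity yields roughly tenfold larger perturbations at the same epsilon.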

4.1) Model's sensitivity

We will apply the Laplace mechanism with a fixed sensitivity for the model. Intuitively, the model's sensitivity is the maximum change in its output (here, the trained model parameters) when a single training data point is changed or removed. The choice of sensitivity is critical: the amount of noise added to the model parameters is proportional to it, so an overly large value may distort the parameters so much that the model becomes unusable. We can estimate the model's sensitivity by sampling, using the functionality provided by the framework:

from shfl.differential_privacy import SensitivitySampler

from shfl.differential_privacy import L1SensitivityNorm

class UniformDistribution(shfl.differential_privacy.ProbabilityDistribution):
    """
    Implements uniform sampling over the rows of the data
    """

    def __init__(self, sample_data):
        self._sample_data = sample_data

    def sample(self, sample_size):
        row_indices = np.random.randint(low=0, high=self._sample_data.shape[0], size=sample_size, dtype='l')
        return self._sample_data[row_indices, :]

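class DeepLearningSample(shfl.model.DeepLearningModel):
    """
    Makes the model callable: calling it trains the model on a data array
    and returns the resulting model parameters
    """

    def __call__(self, data_array):
        # The last column holds the labels; the remaining columns are the features
        data = data_array[:, 0:-1]
        labels = data_array[:, -1].reshape(-1, 1)
        self.train(data, labels)
        return self.get_model_params()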

def model_builder_sample():
    # Create the model
    model = tf.keras.models.Sequential()
    model.add(tf.keras.layers.Dense(8, input_dim=8, kernel_initializer='normal', activation='relu'))
    model.add(tf.keras.layers.Dense(1, kernel_initializer='normal'))

    # Define the training configuration
    loss = tf.keras.losses.MeanSquaredError()
    optimizer = tf.keras.optimizers.Adam()
    metrics = [tf.keras.metrics.RootMeanSquaredError()]

    return DeepLearningSample(model=model, loss=loss, optimizer=optimizer, metrics=metrics)

class L1SensitivityNormLists(L1SensitivityNorm):
    """
    Computes the L1 norm of the difference between each pair of parameter
    arrays in the lists x_1 and x_2, and returns the largest of these norms
    """

    def compute(self, x_1, x_2):
        x = []
        for x_1_i, x_2_i in zip(x_1, x_2):
            x.append(np.sum(np.abs(x_1_i - x_2_i)))
        return np.max(x)  # This could be allowed to be an array

sample_data = np.hstack((train_data, train_labels.reshape(-1, 1)))
distribution = UniformDistribution(sample_data)
sampler = SensitivitySampler()
n_data_size = 100

max_sensitivity, mean_sensitivity = sampler.sample_sensitivity(
    model_builder_sample(),
    L1SensitivityNormLists(), distribution, n_data_size=n_data_size, gamma=0.05)

print("Max sensitivity from sampling: " + str(max_sensitivity))
print("Mean sensitivity from sampling: " + str(mean_sensitivity))

Max sensitivity from sampling: 0.21553615
Mean sensitivity from sampling: 0.019813763

4.2) Run the federated learning experiment with differential privacy

We then assign the Laplace mechanism provided by the Sherpa.ai Federated Learning and Differential Privacy Framework as the access definition for each node's model parameters, and create a new FederatedGovernment object.

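This results in an ε-differentially private FL model, where ε is the privacy budget spent each time a node's parameters are released. Because the sensitivity is estimated by sampling rather than derived analytically, the guarantee is only as strong as that estimate.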
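As a worked example with the sensitivities sampled above and the ε = 0.5 used in the experiment, assuming the standard Laplace noise scale b = Δ/ε and basic sequential composition over the T = 3 training rounds (each round releasing every node's parameters once):

```latex
\begin{aligned}
b_{\text{mean}} &= \Delta_{\text{mean}} / \varepsilon = 0.019813763 / 0.5 \approx 0.0396 \\
b_{\text{max}} &= \Delta_{\text{max}} / \varepsilon = 0.21553615 / 0.5 \approx 0.431 \\
\varepsilon_{\text{total}} &\le T\,\varepsilon = 3 \times 0.5 = 1.5
\end{aligned}
```

Calibrating with the mean rather than the maximum sampled sensitivity therefore adds about 11 times less noise, at the cost of a weaker guarantee.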

For example, choosing ε = 0.5, we can run the FL experiment with DP:

from shfl.differential_privacy import LaplaceMechanism

params_access_definition = LaplaceMechanism(sensitivity=mean_sensitivity, epsilon=0.5)

nodes_federation.configure_model_params_access(params_access_definition)

federated_governmentDP = shfl.federated_government.FederatedGovernment(

model_builder(), nodes_federation, aggregator)

federated_governmentDP.run_rounds(3, test_data, test_label)Evaluation in round 0:

Collaborative model test -> loss: 50.764610290527344 root_mean_squared_error: 3.412365436553955

Evaluation in round 1:

Collaborative model test -> loss: 24.632835388183594 root_mean_squared_error: 3.1394474506378174

Evaluation in round 2:

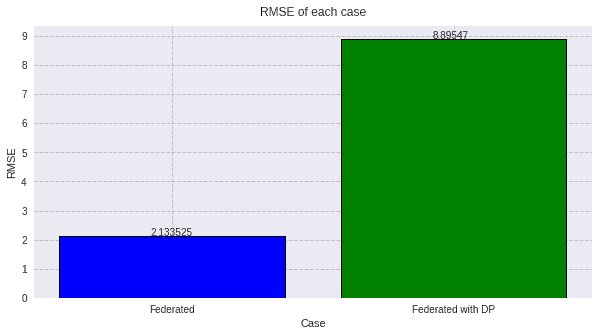

Collaborative model test -> loss: 352.1905517578125 root_mean_squared_error: 4.906912326812744 4.3) Comparing the metrics

Now that both models are trained, let's compute their metrics on the training data:

from shfl.auxiliar_functions_for_notebooks.functionsFL import *

import matplotlib.pyplot as plt

train_labels = np.reshape(train_labels, (train_labels.shape[0], 1))

fed_model = federated_government._server._model

evaluation_fed = fed_model.evaluate(train_data, train_labels)

dp_model = federated_governmentDP._server._model

evaluation_dp = dp_model.evaluate(train_data, train_labels)

We take the root mean squared error (RMSE) of both models and plot it:

values=[round(evaluation_fed[1][1], 6), round(evaluation_dp[1][1], 6)]

titles=['Federated', 'Federated with DP']

colors=['blue', 'green']

plot_all_RMSE(values, titles, colors, 10)
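The plotted values are root mean squared errors. For reference, a minimal NumPy version of the metric is sketched below; `rmse` is an illustrative helper, not a function from the framework. Flattening both inputs is a deliberate design choice: the labels in this notebook were reshaped into (n, 1) columns, and subtracting an (n,) prediction vector from an (n, 1) column would silently broadcast to an (n, n) matrix and give a wrong result.

```python
import numpy as np


def rmse(y_true, y_pred):
    """Root mean squared error: square root of the mean of squared residuals."""
    y_true = np.asarray(y_true, dtype=float).ravel()
    y_pred = np.asarray(y_pred, dtype=float).ravel()
    return float(np.sqrt(np.mean((y_true - y_pred) ** 2)))


# Toy values: residuals are 0.5, -0.5 and 1.0, so MSE = (0.25 + 0.25 + 1.0) / 3 = 0.5
print(rmse([1.0, 2.0, 3.0], [0.5, 2.5, 2.0]))  # sqrt(0.5) ≈ 0.7071
```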

The error clearly increases when Differential Privacy is added: the Laplace noise perturbs the shared model parameters, and a small regression model such as this one is sensitive to even slight changes in its parameters.