Federated models: TAN TM algorithm

In this notebook, we explain how to use a federated learning environment to train a tree-augmented naive Bayes (TAN) classifier with the TM algorithm. The TM algorithm learns the network parameters discriminatively in order to maximize the conditional log-likelihood.
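For reference, the two quantities involved can be written as follows. The notation is introduced here and is not taken from the library: $\theta$ are the network parameters, $d$ is the number of features, $\pi(i)$ is the feature parent of $X_i$ in the TAN graph (omitted for the root feature), and $(\mathbf{x}^{(n)}, c^{(n)})$, $n = 1, \dots, N$, are the labeled samples. The first line is the TAN factorization and the second is the conditional log-likelihood that discriminative learning maximizes:

$$
P_\theta(c, \mathbf{x}) = P_\theta(c) \prod_{i=1}^{d} P_\theta\left(x_i \mid c, x_{\pi(i)}\right)
$$

$$
\mathrm{CLL}(\theta) = \sum_{n=1}^{N} \log P_\theta\left(c^{(n)} \mid \mathbf{x}^{(n)}\right) = \sum_{n=1}^{N} \left[ \log P_\theta\left(c^{(n)}, \mathbf{x}^{(n)}\right) - \log \sum_{c'} P_\theta\left(c', \mathbf{x}^{(n)}\right) \right]
$$

Generative (maximum-likelihood) learning would instead maximize only $\sum_{n} \log P_\theta(c^{(n)}, \mathbf{x}^{(n)})$, without the normalization term over the class values.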

The data

First, we import the libraries and set a global random seed so that the federated training environment and the model are reproducible.

```python
import shfl
import numpy as np
import pandas as pd
from shfl.model.ttm_bin_model import TTM01Algorithm
from shfl.auxiliar_functions_for_notebooks.functionsFL import *  # notebook helpers, e.g. get_delimiter
from shfl.data_base.data_base import WrapLabeledDatabase
from shfl.federated_government.federated_government import FederatedGovernment
from shfl.private.federated_operation import ServerDataNode
from shfl.private.data import DPDataAccessDefinition
from shfl.private.reproducibility import Reproducibility

# Fix the global random seed for reproducible results
Reproducibility(567)
```


Now we load the data from the file, which contains binary features and a final label.

```python
# Load the data; get_delimiter (from the notebook helpers) provides the CSV separator
df = pd.read_csv("data/database_cpf2_hard_noiid.csv",
                 sep=get_delimiter("data/database_cpf2_hard_noiid.csv"),
                 header=None)
```

Let's take a look at the available data.

```python
df.head()
```

|  | 0 | 1 | 2 | 3 | 4 | 5 | 6 | 7 | 8 | 9 | ... | 11 | 12 | 13 | 14 | 15 | 16 | 17 | 18 | 19 | 20 |
|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|
| 0 | 0 | 1 | 0 | 0 | 0 | 0 | 1 | 1 | 0 | 0 | ... | 0 | 1 | 1 | 1 | 1 | 0 | 0 | 0 | 0 | 1 |
| 1 | 0 | 1 | 0 | 0 | 1 | 0 | 1 | 1 | 0 | 0 | ... | 1 | 1 | 1 | 1 | 1 | 0 | 0 | 0 | 1 | 1 |
| 2 | 0 | 0 | 0 | 1 | 0 | 0 | 0 | 1 | 0 | 0 | ... | 0 | 0 | 1 | 1 | 1 | 0 | 0 | 0 | 0 | 1 |
| 3 | 1 | 1 | 1 | 0 | 1 | 1 | 0 | 1 | 0 | 0 | ... | 1 | 1 | 0 | 0 | 1 | 1 | 0 | 0 | 0 | 0 |
| 4 | 0 | 1 | 1 | 0 | 1 | 0 | 1 | 1 | 0 | 0 | ... | 1 | 1 | 1 | 1 | 0 | 1 | 1 | 0 | 1 | 0 |

5 rows × 21 columns

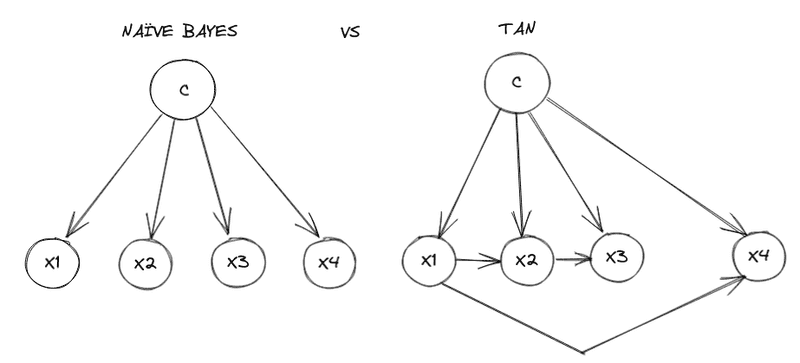

For the TAN algorithm, we define a dependency graph that relates the features to each other. While Naïve Bayes assumes that the features are independent given the class, TAN adds custom dependencies between features, relaxing this structural constraint.
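To make the difference concrete, here is a minimal NumPy sketch of how a TAN classifier scores the classes: each feature is conditioned on the class and on at most one other feature, and the joint probabilities are then normalized over the class values. The toy structure, the random conditional probability tables and the `class_posterior` helper are hypothetical; they are not part of `shfl`, and `TTM01Algorithm`'s internals are not shown in this notebook.

```python
import numpy as np

# Hypothetical toy TAN with a binary class C and three binary features X0, X1, X2.
# Every feature has C as a parent; X1 and X2 also have X0 as a feature parent (tree rooted at X0).
feature_parent = {0: None, 1: 0, 2: 0}

rng = np.random.default_rng(0)
prior = np.array([0.4, 0.6])  # P(C = c)

# cpt[i][c, v_parent, v] = P(X_i = v | C = c, X_parent = v_parent); root features only use v_parent = 0
cpt = {}
for i in feature_parent:
    table = rng.random((2, 2, 2))
    cpt[i] = table / table.sum(axis=2, keepdims=True)  # normalize over the value of X_i


def class_posterior(x):
    """Return P(C | x) for a binary feature vector x under the TAN factorization."""
    log_joint = np.log(prior)
    for c in (0, 1):
        for i, parent in feature_parent.items():
            v_parent = 0 if parent is None else x[parent]
            log_joint[c] += np.log(cpt[i][c, v_parent, x[i]])
    # Normalize over the class values (log-sum-exp for numerical stability)
    log_joint -= np.logaddexp.reduce(log_joint)
    return np.exp(log_joint)


print(class_posterior([1, 0, 1]))
```

Setting every entry of `feature_parent` to `None` removes the feature-to-feature edges, and the same function then computes a Naïve Bayes posterior.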

Let's load the structure we want to use:

```python
# Load the dependency structure, naming the two parent columns Pa1 and Pa2
structure = pd.read_csv("data/structure.csv",
                        sep=get_delimiter("data/structure.csv"),
                        names=["Pa1", "Pa2"],
                        header=None)
```

Each row of the structure describes the parents of one feature, identified by the row index. Column `Pa1` holds the first parent, the class variable `C`, and column `Pa2` holds the second parent, another feature node of the graph. `Pa2` is empty for the feature whose only parent is the class:

```python
structure.head()
```

|  | Pa1 | Pa2 |
|---|---|---|
| 1 | C |  |
| 2 | C | X1 |
| 3 | C | X8 |
| 4 | C | X3 |
| 5 | C | X6 |
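As a sanity check on this format, the rows can be turned into a map from each feature to its feature parent, and the parent chains can be followed to confirm that they contain no cycle, as a TAN tree requires. This is a minimal pandas sketch that assumes row index `i` corresponds to feature `X<i>`; the `feature_parents` and `has_cycle` helpers are hypothetical, and the DataFrame only mirrors the first rows shown above.

```python
import pandas as pd

# Hypothetical rows mirroring structure.head(): index = child feature, Pa1 = class, Pa2 = feature parent
structure = pd.DataFrame(
    {"Pa1": ["C", "C", "C", "C", "C"], "Pa2": [None, "X1", "X8", "X3", "X6"]},
    index=[1, 2, 3, 4, 5],
)


def feature_parents(structure):
    """Map each child feature 'X<i>' to its feature parent, or None if its only parent is the class."""
    parents = {}
    for child, row in structure.iterrows():
        parents[f"X{child}"] = None if pd.isna(row["Pa2"]) else row["Pa2"]
    return parents


def has_cycle(parents):
    """Follow each feature's parent chain and report whether any chain loops back on itself."""
    for start in parents:
        seen = set()
        node = start
        # Stop at the root (None) or at a feature whose parents are not listed
        while node is not None and node in parents:
            if node in seen:
                return True
            seen.add(node)
            node = parents[node]
    return False


parents = feature_parents(structure)
print(parents)
print(has_cycle(parents))
```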

Before operating on the dataset, we extract the number of features in order to later configure the model.

```python
# Number of features: every column except the last one, which holds the label
n_features = len(df.columns) - 1
```

Now, in order to simulate a federated scenario, we need to distribute the data among the nodes. We use the `WrapLabeledDatabase` class to wrap the data and the labels in a format compatible with the framework. This class also allows us to split the global data into a global train set and a global test set for experimentation purposes.

```python
# Convert to NumPy: all columns but the last are features, the last one is the label
df = df.to_numpy()
grouped_data = np.delete(df, -1, axis=1)
grouped_labels = df[:, -1]

database = WrapLabeledDatabase(grouped_data, grouped_labels)
_, _, test_data, test_labels = database.load_data()
```

To finish the distribution, we split the data among the nodes in an IID way, and we apply an internal train/test split on each node so that it can evaluate the model it is training on its own local data.
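Conceptually, this step shuffles the samples, deals them out evenly to the nodes and splits each node's share into a local train and test set. The following NumPy sketch illustrates that idea; the `iid_split` helper and the toy data are hypothetical and are not how `IidDataDistribution` or `split_train_test` are implemented.

```python
import numpy as np


def iid_split(data, labels, num_nodes, train_proportion=0.7, seed=0):
    """Shuffle the samples, deal them evenly to num_nodes nodes and split each share into train/test."""
    rng = np.random.default_rng(seed)
    idx = rng.permutation(len(data))
    nodes = []
    for chunk in np.array_split(idx, num_nodes):
        n_train = int(round(train_proportion * len(chunk)))
        train, test = chunk[:n_train], chunk[n_train:]
        nodes.append({"train": (data[train], labels[train]),
                      "test": (data[test], labels[test])})
    return nodes


# Toy binary data: 100 samples with 4 features
rng = np.random.default_rng(1)
X = rng.integers(0, 2, size=(100, 4))
y = rng.integers(0, 2, size=100)

nodes = iid_split(X, y, num_nodes=3)
for i, node in enumerate(nodes):
    print(i, len(node["train"][0]), len(node["test"][0]))
```

Because the samples are shuffled before being dealt out, each node receives a random subset with approximately the same label distribution as the whole dataset, which is what "IID" refers to here.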

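```python
# Distribute all the data (percent=100) IID among 3 nodes
iid_distribution = shfl.data_distribution.IidDataDistribution(database)
nodes_federation, test_data, test_labels = iid_distribution.get_nodes_federation(num_nodes=3, percent=100)

# Keep 70% of each node's data for training and the rest for local evaluation
nodes_federation.split_train_test(0.7);
```

The model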

Next, we define the `model_builder()` function, which creates an instance of the TAN TM algorithm (`TTM01Algorithm`).

With the implementation below, we only need to pass the number of features of the dataset, calculated previously, together with the structure loaded above and the `t_max` parameter:

```python
def model_builder():
    # Build a TAN TM model for binary features using the dependency structure loaded above
    model = TTM01Algorithm(n_features, t_max=1, structure=structure)
    return model
```

Run the federated learning experiment

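After defining the data and the model, we are ready to run our model in a federated configuration. Let's define the needed components, a federated averaging aggregator and the federated government, and run two rounds of training, evaluating on the global test set after each round.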

```python
# Aggregate the node models with federated averaging
aggregator = shfl.federated_aggregator.FedAvgAggregator()
federated_government = FederatedGovernment(model_builder(), nodes_federation, aggregator)

# Run 2 rounds of training, evaluating on the global test set after each round
federated_government.run_rounds(n_rounds=2, test_data=test_data, test_label=test_labels)
```

```
Evaluation in round 0:
########################################
Node 0:
-> Global test accuracy:0.8815968230745115
-> Local accuracy:0.872893533638145
Node 1:
-> Global test accuracy:0.8801860173476852
-> Local accuracy:0.8798171129980406
Node 2:
-> Global test accuracy:0.8841049221444247
-> Local accuracy:0.8786414108425865
########################################
Collaborative model test -> 0.8818580833942941

Evaluation in round 1:
########################################
Node 0:
-> Global test accuracy:0.8810220503709897
-> Local accuracy:0.8731548007838015
Node 1:
-> Global test accuracy:0.8808652941791201
-> Local accuracy:0.8802090137165252
Node 2:
-> Global test accuracy:0.8871355418539032
-> Local accuracy:0.882560418027433
########################################
Collaborative model test -> 0.8826941164175985
```
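To illustrate what the aggregation step does conceptually, here is a minimal sketch of federated averaging over per-node parameters. It assumes that each node's parameters are a list of NumPy arrays and that the average is unweighted (one vote per node); the `federated_average` helper and the toy probability tables are hypothetical, not `shfl` internals.

```python
import numpy as np


def federated_average(node_params):
    """Average the parameter arrays of several nodes element-wise (unweighted, one vote per node)."""
    # zip(*node_params) groups the i-th array of every node together
    return [np.mean(np.stack(arrays), axis=0) for arrays in zip(*node_params)]


# Hypothetical parameters from 3 nodes: a class prior and a 2x2 conditional probability table each
node_params = [
    [np.array([0.2, 0.8]), np.array([[0.1, 0.9], [0.5, 0.5]])],
    [np.array([0.4, 0.6]), np.array([[0.3, 0.7], [0.5, 0.5]])],
    [np.array([0.3, 0.7]), np.array([[0.2, 0.8], [0.2, 0.8]])],
]

global_params = federated_average(node_params)
for array in global_params:
    print(array)
```

Because each averaged row is a convex combination of normalized distributions, it still sums to 1, so averaging probability tables in this way keeps them valid.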